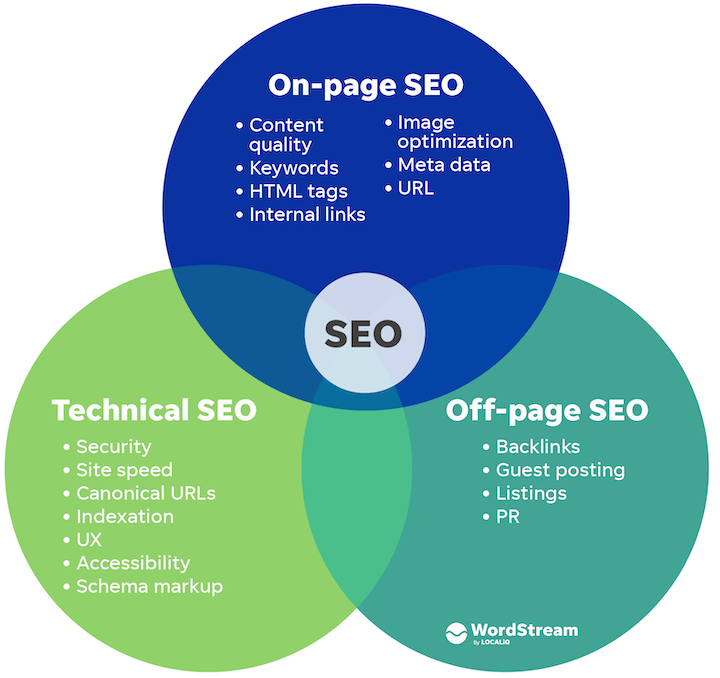

On-page SEO, off-page SEO, and technical SEO can be thought of as three pillars of organic search engine optimization. Out of the three, technical SEO is most often ignored, most likely because it’s the trickiest to master. However, with the competition in search results now, marketers cannot afford to shy away from the challenges of technical SEO—having a site that is crawlable, fast, and secure has never been more essential to ensure your site performs well and ranks well in search engines.

Because technical SEO is such a vast topic (and growing), this piece won’t cover everything required for a full technical SEO audit. However, it will address six fundamental aspects of technical SEO that you should be looking at to improve your website’s performance and keep it effective and healthy. Once you’ve got these six bases covered, you can move on to more advanced technical SEO strategies.

What is a technical SEO audit?

Technical SEO involves optimizations that make your site more efficient to crawl and index so Google can deliver the right content from your site to users at the right time. Here are some things that a technical SEO audit covers:

- Site architecture

- URL structure

- The way your site is built and coded

- Redirects

- Your sitemap

- Your Robots.txt file

- Image delivery

- Site errors

Technical SEO vs. on-page SEO vs. off-page SEO

Today, we’ll be reviewing the first six things you should check for a quick technical SEO audit.

If you’re looking for help with the other types of SEO, we’ve got posts for that!

- Our 10-step SEO audit covers both technical SEO and content optimizations.

- Our on-page SEO checklist covers everything you need for blog posts and pages.

Technical SEO audit checklist

Here are the six steps we’ll be covering in this technical SEO audit guide:

- Make sure your site is crawlable

- Make sure your site is indexable

- Review your sitemap

- Ensure mobile-friendliness

- Check page speed

- Review duplicate content

Let’s begin!

1. Make sure your site is crawlable

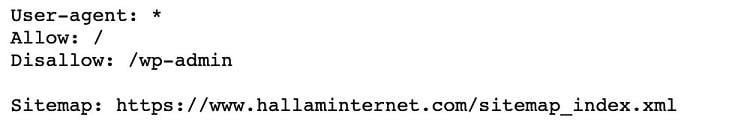

There’s no use writing pages of great content if search engines cannot crawl and index these pages. Therefore, you should start by checking your robots.txt file. This file is the first port of call for any web-crawling software when it arrives at your site. Your robots.txt file outlines which parts of your website should and should not be crawled. It does this by “allowing” or “disallowing” the behavior of certain user agents.

To find your robots.txt file, simply go to yourwebsite.com/robots.txt.

The robots.txt file is publicly available and can be found by adding /robots.txt to the end of any root domain. Here is an example for the Hallam site.

We can see that Hallam is requesting any URLs starting with /wp-admin (the backend of the website) not to be crawled. By indicating where not to allow these user agents, you save bandwidth, server resources, and your crawl budget. You also want to make sure you haven’t prevented search engine bots from crawling important parts of your website by accidentally “disallowing” them. Because robots.txt is the first file a bot sees when crawling your site, it is also best practice to point to your sitemap from it.
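As a rough illustration of the allow/disallow logic described above, the following Python sketch uses the standard library's `urllib.robotparser` to test whether given URLs would be crawlable. The robots.txt content and the example.com URLs are hypothetical stand-ins, not Hallam's actual file, and Python's parser may not interpret every rule exactly as Googlebot does.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt, modelled on the /wp-admin rule described above.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin

Sitemap: https://www.example.com/sitemap.xml
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without making a network request."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# Check an important page and a backend page (both paths are made up).
for path in ("/blog/technical-seo/", "/wp-admin/options.php"):
    url = "https://www.example.com" + path
    allowed = parser.can_fetch("Googlebot", url)
    print(path, "->", "crawlable" if allowed else "blocked")

# Sitemap lines declared in the file (requires Python 3.8+).
print("Sitemaps:", parser.site_maps())
```

To audit a live site, you could fetch the real file with `parser.set_url(...)` and `parser.read()` instead of parsing a string, then run your most important URLs through `can_fetch`.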

You can edit and test your robots.txt file with Google’s robots.txt tester.

Here, you can input any URL on the site to check if it is crawlable or if there are any errors or warnings in your robots.txt file.

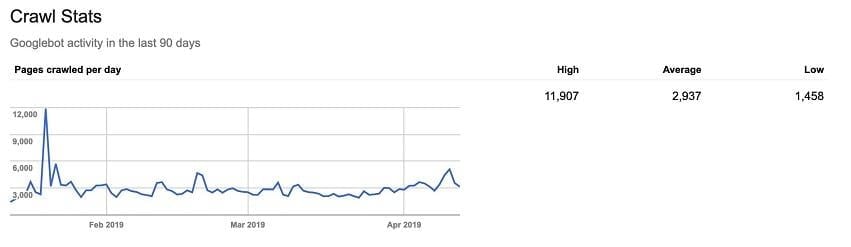

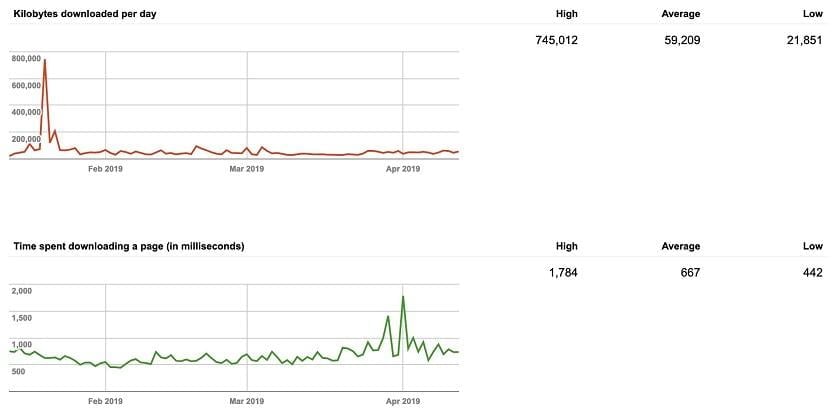

While Google has done a relatively good job of transferring the most important aspects of the old tool into the new Google Search Console, for many digital marketers the new version still offers less functionality than the old one. This is particularly relevant when it comes to technical SEO. At the time of writing, the crawl stats area on the old search console is still viewable and is fundamental to understanding how your site is being crawled.

This report shows three main graphs with data from the last 90 days. Pages crawled per day, kilobytes downloaded per day, and time spent downloading a page (in milliseconds) all summarize your website’s crawl rate and relationship with search engine bots. You want your website to almost always have a high crawl rate; this means that your website is visited regularly by search engine bots and indicates a fast and easy-to-crawl website. Consistency is the desired outcome from these graphs: any major fluctuations can point to broken HTML, stale content, or your robots.txt file blocking too much of your website. If your time spent downloading a page contains very high numbers, it means Googlebot is spending too long fetching each page, so your website is being crawled and indexed more slowly.

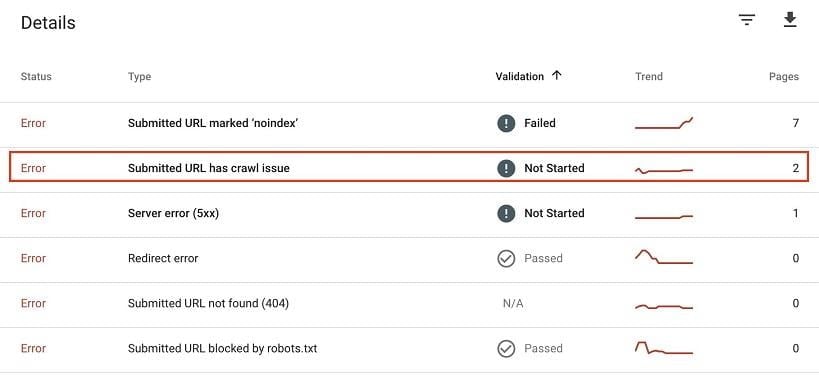

You can see crawl errors in the coverage report in the new Search Console.

Clicking through to these highlights specific pages with crawl issues. You’ll want to make sure none of these are important pages for your website, and address the cause of any issue as soon as possible.

If you find significant crawl errors or fluctuations in either the crawl stats or coverage reports, you can look into it further by carrying out a log file analysis. Accessing the raw data from your server logs can be a bit of a pain, and the analysis is very advanced, but it can help you understand exactly what pages can and cannot be crawled, which pages are prioritised, areas of crawl budget waste, and the server responses encountered by bots during their crawl of your website.
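To give a sense of what a basic log file analysis involves, here is a minimal Python sketch that counts which paths a bot requested and which server responses it received. It assumes the common "combined" access log format; the sample lines, IP addresses, and paths are invented for illustration, and matching on the user-agent string alone is a simplification because user agents can be spoofed.

```python
import re
from collections import Counter

# Hypothetical sample lines in the "combined" access log format.
SAMPLE_LOG = """\
66.249.66.1 - - [10/Mar/2019:06:25:24 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
66.249.66.1 - - [10/Mar/2019:06:25:30 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
203.0.113.7 - - [10/Mar/2019:06:26:01 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"
66.249.66.1 - - [10/Mar/2019:06:27:12 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
"""

# Captures the request path, the status code, and the user agent.
LINE_RE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def summarise_bot_hits(log_text, bot_token="Googlebot"):
    """Count requested paths and status codes for lines whose user agent contains bot_token."""
    paths, statuses = Counter(), Counter()
    for line in log_text.splitlines():
        match = LINE_RE.search(line)
        if match and bot_token in match["agent"]:
            paths[match["path"]] += 1
            statuses[match["status"]] += 1
    return paths, statuses


paths, statuses = summarise_bot_hits(SAMPLE_LOG)
print("Most crawled paths:", paths.most_common())
print("Server responses:", dict(statuses))
```

Paths with many hits but little value hint at crawl budget waste, while 4xx and 5xx counts show the server responses bots are running into.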

2. Check that your site is indexable

Now that we’ve analysed whether Googlebot can actually crawl our website, we need to understand whether the pages on our site are being indexed. There are many ways to do this.

1. Search Console coverage report

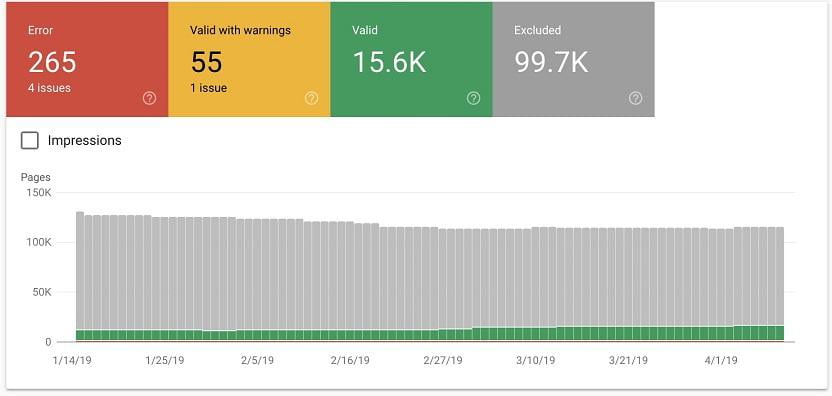

Diving back into the Google Search Console coverage report, we can look at the status of every page of the website.

In this report we can see:

- Errors: Redirect errors, 404s.

- Valid with warnings: Pages that are indexed but with warnings attached to them.

- Valid: Pages that are successfully indexed.

- Excluded: Pages that are excluded from being indexed and the reasons for this, such as pages with redirects or blocked by the robots.txt.

You can also analyze specific URLs using the URL inspection tool. Perhaps you want to check that a new page you’ve added is indexed, or troubleshoot a URL if there has been a drop in traffic to one of your main pages.

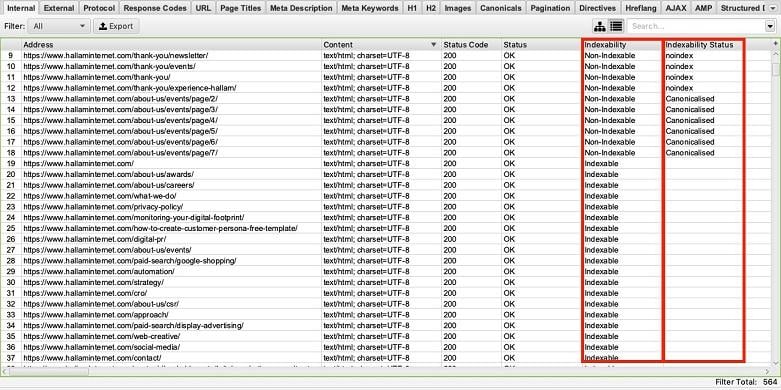

2. Use a crawl tool (like Screaming Frog)

Another good way to check the indexability of your website is to run a crawl. One of the most powerful and versatile pieces of crawling software is Screaming Frog. Depending on the size of your website, you can use the free version, which has a crawl limit of 500 URLs and more limited capabilities, or the paid version, which is £149 per year with no crawl limit, greater functionality, and APIs available.

Once the crawl has run you can see two columns regarding indexing.

- Indexability: This will indicate whether the URL is “Indexable” or “Non-Indexable.”

- Indexability Status: This will show the reason why a URL is non-indexable. For example, if it’s canonicalised to another URL or has a noindex tag.

This SEO tool is a great way of bulk auditing your site to understand which pages are being indexed, and will therefore appear in the search results, and which ones are non-indexable. Sort the columns and look for anomalies; using the Google Analytics API is a good way of identifying your important pages so you can check their indexability.
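To make the two indexability columns more concrete, here is a simplified Python sketch of the kind of check a crawler might perform on a page's HTML. It is not Screaming Frog's actual logic: the function name and the status strings are assumptions, the canonical comparison is a naive exact-string match, and it ignores other signals such as HTTP status codes and `X-Robots-Tag` headers.

```python
from html.parser import HTMLParser


class IndexabilityParser(HTMLParser):
    """Collects the meta robots directive and the canonical link from a page."""

    def __init__(self):
        super().__init__()
        self.robots = ""
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            self.robots = (attrs.get("content") or "").lower()
        elif tag == "link" and "canonical" in (attrs.get("rel") or "").lower().split():
            self.canonical = attrs.get("href")


def indexability(url, html):
    """Return (indexability, status) for one page, based on its HTML only."""
    parser = IndexabilityParser()
    parser.feed(html)
    if "noindex" in parser.robots:
        return "Non-Indexable", "noindex"
    if parser.canonical and parser.canonical != url:
        return "Non-Indexable", "canonicalised"
    return "Indexable", ""


# Hypothetical page that points its canonical at a different URL.
page = '<head><link rel="canonical" href="https://www.example.com/shoes/"></head>'
print(indexability("https://www.example.com/shoes/?sort=price", page))
```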

3. Search on Google

Finally, the easiest way of checking how many of your pages are indexed is using the site:domain Google Search parameter. In the search bar, input site:yourdomain and press enter. The search results will show you every page on your website that has been indexed by Google. Here’s an example:

Here we see that boots.com has around 95,000 URLs indexed. Using this function can give you a good understanding of how many pages Google is currently storing. If you notice a large difference between the number of pages you think you have and the number of pages being indexed, then it is worth investigating further.

- Is the HTTP version of your site still being indexed?

- Do you have duplicate pages indexed that should be canonicalised?

- Are large parts of your website not being indexed that should be?

Using these three techniques, you can build up a good picture of how your site is being indexed by Google and make changes accordingly.

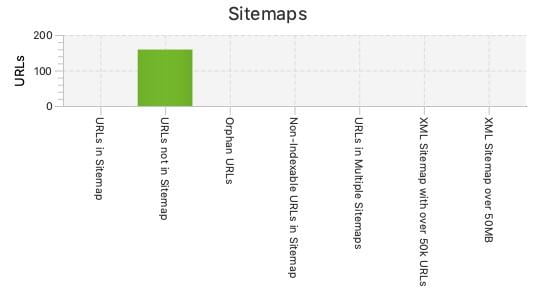

3. Review your sitemap

The importance of a comprehensive and structured sitemap should not be underestimated when it comes to SEO. Your XML sitemap is a map of your website to Google and other search engine crawlers. Essentially, it helps these crawlers find and rank your website pages.

There are some important elements to consider when it comes to an effective sitemap:

- Your sitemap should be formatted properly in an XML document.

- It should follow XML sitemap protocol.

- Only include canonical versions of URLs.

- Do not include “noindex” URLs.

- Include all new pages when you update or create them.

If you use the Yoast SEO plugin, it can create an XML sitemap for you. If you’re using Screaming Frog, their sitemap analysis is very detailed. You can see the URLs in your sitemap, missing URLs and orphaned URLs.

Make sure your sitemap includes your most important pages, isn’t including pages you don’t want Google to index, and is structured correctly. Once you have done all this, you should resubmit your sitemap to your Google Search Console.
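The sitemap checks above can be sketched in a few lines of Python using the standard library's XML parser. The sample sitemap, the example.com URLs, and the lists of "noindex" and "important" pages are all hypothetical; in practice those lists would come from your own crawl or analytics data.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the XML sitemap protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap content for illustration.
SAMPLE_SITEMAP = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/services/</loc></url>
  <url><loc>https://www.example.com/thank-you/</loc></url>
</urlset>
"""


def sitemap_urls(xml_text):
    """Return every <loc> URL listed in a sitemap document."""
    root = ET.fromstring(xml_text.encode("utf-8"))
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)]


def audit_sitemap(xml_text, noindex_urls, important_urls):
    """Flag noindex URLs that are listed and important URLs that are missing."""
    listed = set(sitemap_urls(xml_text))
    return {
        "noindex_in_sitemap": sorted(listed & set(noindex_urls)),
        "missing_important": sorted(set(important_urls) - listed),
    }


report = audit_sitemap(
    SAMPLE_SITEMAP,
    noindex_urls=["https://www.example.com/thank-you/"],
    important_urls=["https://www.example.com/", "https://www.example.com/contact/"],
)
print(report)
```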

4. Ensure your website is mobile-friendly

Last year Google announced the rollout of mobile-first indexing. This meant that instead of using the desktop versions of the page for ranking and indexing, they would be using the mobile version of your page. This is all part of keeping up with how users are engaging with content online. 52% of global internet traffic now comes from mobile devices, so ensuring your website is mobile-friendly is more important than ever.

Google’s Mobile-Friendly Test is a free tool you can use to check if your page is mobile responsive and easy to use. Input your domain, and it will show you how the page is rendered for mobile and indicate whether it is mobile-friendly.

It’s important to manually check your website, too. Use your own phone and navigate across your site, spotting any errors along key conversion pathways of your site. Check that all contact forms, phone numbers, and key service pages are functioning correctly. If you’re on desktop, right-click and inspect the page, then use your browser’s device emulation mode to preview the mobile layout.
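Alongside manual checks, one quick automated signal is whether a page declares a responsive viewport. The Python sketch below looks for a `<meta name="viewport">` tag containing `width=device-width`. This is only a rough heuristic and an assumption on our part, not how Google's Mobile-Friendly Test works; a page can pass this check and still be awkward to use on a phone.

```python
from html.parser import HTMLParser


class ViewportParser(HTMLParser):
    """Records the content of the viewport meta tag, if one exists."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "viewport":
            self.viewport = attrs.get("content") or ""


def has_responsive_viewport(html):
    """True if the page declares a viewport that tracks the device width."""
    parser = ViewportParser()
    parser.feed(html)
    if parser.viewport is None:
        return False
    return "width=device-width" in parser.viewport.replace(" ", "").lower()


# Hypothetical page head for illustration.
html = '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
print(has_responsive_viewport(html))
```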

If you haven’t built your website to be compatible with mobile, then you should address this immediately (see the 2021 update on mobile-first indexing). Many of your competitors will have already considered this, and the longer you leave it, the further behind you’ll be. Don’t miss out on traffic and potential conversions by leaving it any longer.

For more on this aspect of SEO, head to our post on mobile-first indexing.

5. Audit page speed

Page speed is now a ranking factor. Having a site that is fast, responsive and user-friendly is the name of the game for Google in 2019.

You can assess your site’s speed with a whole variety of tools. I’ll cover some of the main ones here and include some recommendations.

Google PageSpeed Insights

Google PageSpeed Insights is another powerful and free Google tool. It gives you a score of “Fast,” “Average,” or “Slow” on both mobile and desktop, and it includes recommendations for improving your page speed.

Test your homepage and core pages to see where your website is coming up short and what you can do to improve your site speed.

It’s important to understand that when digital marketers talk about page speed, we aren’t just referring to how fast the page loads for a person but also how easy and fast it is for search engines to crawl. This is why it’s best practice to minify and bundle your CSS and JavaScript files. Don’t rely on just checking how the page looks to the naked eye; use online tools to fully analyse how the page loads for humans and search engines.

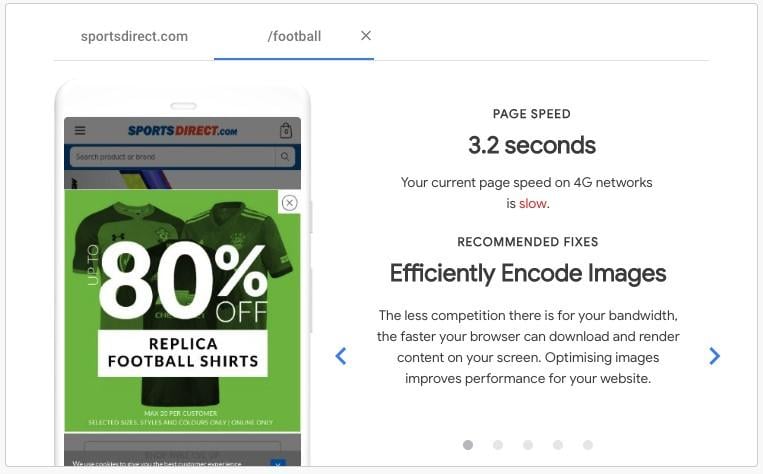

Google has another free tool for site speed focusing on mobile in particular, reinforcing how important mobile site speed is for Google. Test My Site from Google provides you with in-depth analysis of how your website performs on mobile, including:

- How fast your site is on a 3G and 4G connection. It will include your speed in seconds, a rating, and whether it is slowing down or speeding up.

- Custom fixes for individual pages

- The ability to benchmark your site speed against up to 10 competitors

- Most importantly, how your revenue is impacted by site speed

This is vital if you own an ecommerce site, because it demonstrates how much potential revenue you are losing from poor mobile site speed and the positive impact small improvements can make to your bottom line.

Conveniently, it can all be summarised in your free, easy-to-understand report.

Google Analytics

You can also use Google Analytics to see detailed diagnostics of how to improve your site speed. The site speed area in Analytics, found in Behaviour > Site Speed, is packed full of useful data including how specific pages perform in different browsers and countries. You can check this against your page views to make sure you are prioritising your most important pages.

Your page load speed depends on many different factors. But there are some common fixes that you can look at once you’ve done your research, including:

- Optimising your images

- Fixing bloated JavaScript

- Reducing server requests

- Ensuring effective caching

- Making sure your server is quick

- Considering a Content Delivery Network (CDN)
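As one example of checking a fix from the list above, the following Python sketch reviews the `Cache-Control` headers of a set of assets and flags those with no caching or a short cache lifetime. The asset paths and header values are hypothetical, and the one-day threshold is an arbitrary assumption rather than a recommendation from any of the tools mentioned.

```python
import re


def cache_lifetime_seconds(cache_control):
    """Return max-age in seconds, 0 if storage is forbidden, or None if unspecified."""
    directives = [d.strip().lower() for d in cache_control.split(",")]
    if "no-store" in directives:
        return 0
    for directive in directives:
        match = re.fullmatch(r"max-age=(\d+)", directive)
        if match:
            return int(match.group(1))
    return None


def flag_short_cache(assets, min_seconds=86400):
    """List assets whose cache lifetime is missing or below min_seconds."""
    flagged = []
    for path, header in assets.items():
        lifetime = cache_lifetime_seconds(header)
        if lifetime is None or lifetime < min_seconds:
            flagged.append(path)
    return flagged


# Hypothetical Cache-Control headers collected from a crawl.
assets = {
    "/css/site.css": "public, max-age=31536000",
    "/js/app.js": "max-age=600",
    "/img/logo.png": "",
    "/fonts/brand.woff2": "no-store",
}
print(flag_short_cache(assets))
```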

6. Duplicate content review

Finally, it’s time to look at your website’s duplicate content. As most people in digital marketing know, duplicate content is a big no-no for SEO. While there is no Google penalty for duplicate content, Google doesn’t like multiple copies of the same information. They serve little purpose to the user, and Google struggles to understand which page to rank in the SERPs, ultimately meaning it’s more likely to serve one of your competitors’ pages.

There is one quick check you can do using Google search parameters. Enter “info:www.your-domain-name.com”

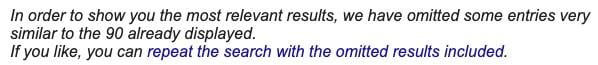

Head to the last page of the search results. If you have duplicate content, you may see the following message:

If you have duplicate content showing here, then it’s worth running a crawl using Screaming Frog. You can then sort by Page Title to see if there are any duplicate pages on your site.
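The "sort by Page Title" step can also be done programmatically once you have exported your crawl. The Python sketch below groups URLs by normalised title and returns any title shared by more than one URL. The input format (a list of URL and title pairs) and the sample data are assumptions for illustration, and matching titles only suggest duplicate pages; the content itself still needs reviewing.

```python
from collections import defaultdict


def duplicate_titles(pages):
    """Group URLs by page title (case and whitespace normalised) and keep the repeats."""
    groups = defaultdict(list)
    for url, title in pages:
        normalised = " ".join(title.split()).lower()
        groups[normalised].append(url)
    return {title: urls for title, urls in groups.items() if len(urls) > 1}


# Hypothetical crawl export: (URL, page title) pairs.
pages = [
    ("https://www.example.com/shoes/", "Running Shoes | Example"),
    ("https://www.example.com/shoes/?sort=price", "Running  Shoes | Example"),
    ("https://www.example.com/contact/", "Contact Us | Example"),
]
for title, urls in duplicate_titles(pages).items():
    print(title, "->", urls)
```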

Get your technical SEO audit started

These really are the basics of technical SEO; any digital marketer worth their salt will have these fundamentals working for any website they manage. What is really fascinating is how much deeper you can go into technical SEO to find other issues. It may seem daunting, but hopefully once you’ve done your first audit, you’ll be keen to see what other improvements you can make to your website. These six steps are a great start for any digital marketer looking to make sure their website is working effectively for search engines. Most importantly, they are all free, so go get started!

About the author

Elliot Haines is an owned media consultant at Hallam. Elliot has experience in creating and executing SEO and Digital PR strategies for both B2B and B2C clients, delivering results across a range of industries including finance, hospitality, travel, and the energy sector.